SAP Business AI implements Intelligent Enterprise processes with SAP S/4HANA Cloud as the central core on hybrid multi-cloud platforms like SAP Business Technology Platform (SAP BTP) or Microsoft Azure.

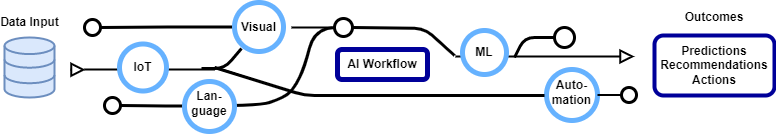

Business AI focuses on AI use-cases that enhance SAP business processes with predictions, data-driven decisions, recommendations or newly generated content. These use-cases are growing rapidly, together with the new AI technologies and machine learning model types offered on cloud platforms like SAP Cloud, Microsoft Azure or Amazon AWS.

Based on its deep knowledge of business processes, SAP develops Large Process Models (LPM) which learn from best practices and recommendations and provide AI-powered insights as a foundation for Generative Business AI solutions. Beyond that, business process analysis with SAP Signavio offers options to optimize Business AI scenarios driven by data insights.

Cloud architectures integrate AI foundation capabilities like Machine Learning, Knowledge Mining, Natural Language Processing and Computer Vision to implement SAP Business AI solutions and use-cases on multi-cloud platforms. Multi-cloud Business AI solutions can be composed of services from different cloud platforms after comparing service prices and model performance.

With great power comes great responsibility. (Spider-Man)

Besides great opportunities and power, Business AI brings risks, so measures have to be implemented to manage these risks and to ensure compliance with legal and ethical principles. Responsible AI shall enhance the transparency and comprehensibility of AI processes and their outputs, such as decisions, recommendations or newly generated content.

Responsible AI solutions shall first identify potential harms, then measure these harms in the output, mitigate them, e.g. with content filters on safety layers, and finally define plans to operate Business AI solutions responsibly.

Accountability principles define that the people who design and deploy AI systems are responsible for how these systems act, and that humans must have the opportunity to override AI decisions. Transparency of AI systems can be encouraged by documenting machine learning model algorithms and transformations, to implement visible, understandable and comprehensible AI scenarios.

AI Fairness principles ensure equal treatment by avoiding bias and discrimination based on gender, race, sexual orientation or religion. Reliability and Safety principles ensure that AI systems perform consistently as intended, even in unexpected situations.

Privacy and Security principles provide consumers with information and controls to protect their personal data. Inclusive AI systems engage all people, empower people with disabilities and consider people of all ethnic backgrounds.

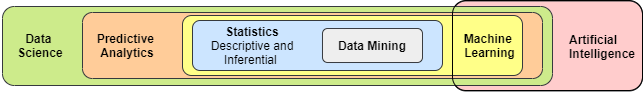

Basic machine learning models are trained on historical data with algorithms based on data science and software engineering techniques to find statistical relationships. Trained inferencing functions make predictions based on probabilities with confidence scores.

General purpose models, like pre-trained foundation models, are applicable for a wide range of use-cases, but they can also be fine-tuned with custom data for individual AI scenarios.

Multi-modal models can process different kinds of input, like text or images, and convert these prompts into various outputs.

Combining machine learning models with orchestration workflows enables Business AI solutions with advanced capabilities like Optical Character Recognition (OCR) of business documents followed by Named Entity Recognition (NER).

Natural language processing (NLP) supports capabilities like sentiment analysis, key phrase identification, text summarization, entity recognition or conversational language understanding. These features are based on text analysis and analytics which enable AI solutions to understand written and spoken words.

Text analysis looks backwards at existing text. Tokenization techniques break down a text corpus, a collection of documents, into tokens and can be combined with other techniques like normalization, stop word removal or stemming.
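The preprocessing steps above can be sketched in plain Python. This is a minimal illustration, assuming a tiny hand-picked stop word list and a naive suffix-stripping stemmer (both hypothetical simplifications; libraries such as NLTK or spaCy provide production-grade tokenizers and stemmers).

```python
import re

# Hypothetical, tiny stop word list for illustration only
STOP_WORDS = {"the", "a", "an", "is", "are", "and", "of", "to", "in"}


def tokenize(text: str) -> list[str]:
    """Normalize to lowercase and split the text into word tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def naive_stem(token: str) -> str:
    """Strip a few common English suffixes; a crude stand-in for a real stemmer."""
    for suffix in ("ing", "ed", "s"):
        if token.endswith(suffix) and len(token) - len(suffix) >= 3:
            return token[: -len(suffix)]
    return token


def preprocess(text: str) -> list[str]:
    tokens = tokenize(text)                              # tokenization + normalization
    tokens = [t for t in tokens if t not in STOP_WORDS]  # stop word removal
    return [naive_stem(t) for t in tokens]               # stemming


print(preprocess("The invoices are processed and posted in the system."))
# ['invoice', 'process', 'post', 'system']
```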

Simple frequency analysis counts the occurrences of tokens within one document. In contrast, the term frequency-inverse document frequency (TF-IDF) statistical method measures the importance of a token within a document relative to the whole text corpus or collection of documents.
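A minimal TF-IDF sketch in plain Python, assuming already tokenized documents and the unsmoothed variant idf = log(N / df). Libraries often apply smoothing or normalization, so their exact scores differ from this sketch.

```python
import math
from collections import Counter


def term_frequencies(doc: list[str]) -> dict[str, float]:
    """Relative frequency of each token within one document."""
    counts = Counter(doc)
    total = len(doc)
    return {token: n / total for token, n in counts.items()}


def tf_idf(corpus: list[list[str]]) -> list[dict[str, float]]:
    """TF-IDF per document, using the unsmoothed variant idf = log(N / df)."""
    n_docs = len(corpus)
    # document frequency: in how many documents does each token occur?
    df = Counter(token for doc in corpus for token in set(doc))
    scores = []
    for doc in corpus:
        tf = term_frequencies(doc)
        scores.append({t: tf[t] * math.log(n_docs / df[t]) for t in tf})
    return scores


# Hypothetical, already tokenized corpus of three documents
corpus = [
    ["invoice", "payment", "invoice"],
    ["payment", "order"],
    ["order", "delivery"],
]
for doc_scores in tf_idf(corpus):
    print({t: round(s, 3) for t, s in doc_scores.items()})
```

The token "invoice" scores highest in the first document because it occurs there twice and in no other document, while "payment" is discounted for appearing in two of the three documents.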

Forward-oriented text analytics can be implemented with machine learning models in Conversational or Generative AI solutions.

Generative AI can generate new content for a wide range of AI scenarios, often with multi-modal foundation models that accept multiple input options like text, speech or image.

Generative AI Prompts

Foundation models are trained with self-supervised learning, which creates labels from the structure of the data itself, and offer emergent capabilities that allow them to be applied to many diverse tasks.

NLP use-cases are typically implemented with Large Language Models (LLMs) to process language input and generate text, code or image output. Some Generative AI NLP tasks are classification, sentiment determination, summarization, comparison or generation.

Business Context Grounding

Input prompt instructions, sent to Deep Learning models, can be enriched with grounding techniques to improve outputs of Generative AI solutions.

Prompt engineering and advanced search capabilities are typical options to optimize generated outputs for a custom business context without model retraining. Vector search on embeddings created by embedding models is commonly used on different data sources for RAG, similarity search or recommendation scenarios.
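Vector similarity search can be sketched as a brute-force comparison of a query embedding against stored embeddings with cosine similarity. The embeddings below are hypothetical three-dimensional vectors; a real setup would obtain them from an embedding model and typically use an approximate nearest-neighbour index instead of scoring every vector.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors: 1.0 means same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm


def top_k(query: list[float], index: dict[str, list[float]], k: int = 2) -> list[tuple[str, float]]:
    """Brute-force nearest neighbours: score every stored vector against the query."""
    scored = [(doc_id, cosine_similarity(query, vec)) for doc_id, vec in index.items()]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)[:k]


# Hypothetical 3-dimensional embeddings; real embedding models return hundreds of dimensions
index = {
    "invoice_policy": [0.9, 0.1, 0.0],
    "travel_policy": [0.1, 0.9, 0.1],
    "payment_terms": [0.8, 0.2, 0.1],
}
query = [0.85, 0.15, 0.05]
print(top_k(query, index))
```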

Based on vector search techniques, Retrieval-Augmented Generation (RAG) augments prompts for generative AI solutions with information retrieved from custom data sources.
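A simplified RAG flow retrieves relevant context and then augments the prompt with it. As an assumption to keep the sketch self-contained, retrieval here uses plain word overlap as a stand-in for vector search, the documents are hypothetical, and the final call to an LLM is omitted.

```python
def retrieve(question: str, documents: dict[str, str], k: int = 1) -> list[str]:
    """Rank documents by word overlap with the question.
    Stand-in for vector search: a real RAG setup would compare embeddings."""
    q_words = set(question.lower().split())
    ranked = sorted(
        documents.values(),
        key=lambda text: len(q_words & set(text.lower().split())),
        reverse=True,
    )
    return ranked[:k]


def build_prompt(question: str, context: list[str]) -> str:
    """Augment the user question with retrieved business context (grounding)."""
    context_block = "\n".join(f"- {c}" for c in context)
    return (
        "Answer the question using only the context below.\n"
        f"Context:\n{context_block}\n"
        f"Question: {question}"
    )


# Hypothetical custom data source
documents = {
    "doc1": "invoices above 10000 eur require two approvals",
    "doc2": "travel expenses must be submitted within 30 days",
}
question = "How many approvals do invoices above 10000 EUR need?"
prompt = build_prompt(question, retrieve(question, documents))
print(prompt)  # this augmented prompt would then be sent to the LLM
```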

Transformer Models are composed of encoder and decoder components with multiple layers.

Encoders transform language tokens into coordinates within the multidimensional vector spaces of semantic language models. The distance between tokens within these embedding spaces represents their semantic relationship. Embeddings are used for NLP analysis tasks like summarization, key phrase extraction, sentiment analysis with confidence scores or translation.

Decoders are able to generate new text sequences and enable decoder-only Conversational or Generative AI solutions.

Attention layers are part of both encoder and decoder components. Attention layers within encoder blocks try to quantify the meaning of words within text sequences with weights. Decoder attention layers help predict the most probable next output token in a sequence.
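The weighting performed by attention layers is commonly implemented as scaled dot-product attention, softmax(Q·K^T / sqrt(d_k))·V. The pure-Python sketch below uses hypothetical two-dimensional token vectors and omits the learned projection matrices, masking and multiple heads that real transformer layers use.

```python
import math


def softmax(xs: list[float]) -> list[float]:
    m = max(xs)  # subtract the maximum for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def attention(Q, K, V):
    """Scaled dot-product attention: softmax(Q K^T / sqrt(d_k)) V.
    Q, K and V are lists of row vectors, one row per token."""
    d_k = len(K[0])
    output = []
    for q in Q:
        # similarity of this query token to every key token
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in K]
        weights = softmax(scores)  # attention weights sum to 1
        # weighted sum of the value vectors
        output.append([sum(w * v[j] for w, v in zip(weights, V)) for j in range(len(V[0]))])
    return output


# Hypothetical 2-dimensional vectors for a 3-token sequence (self-attention: Q = K = V)
X = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
print(attention(X, X, X))
```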

Traditional transformer models implement both encoder and decoder components. Encoders tokenize input text and transform these tokens into embeddings, vector-based semantic and syntactic representations, which decoders use to generate new language sequences by determining the most probable text sequences.

Interaction with Generative AI assistants like Microsoft Copilot or SAP Joule improves the digital user experience with data-driven decisions supported by LLMs. Microsoft Copilot combines OpenAI GPT LLMs with data provided by Microsoft Graph and offers the option to build custom copilots for various business-specific tasks.

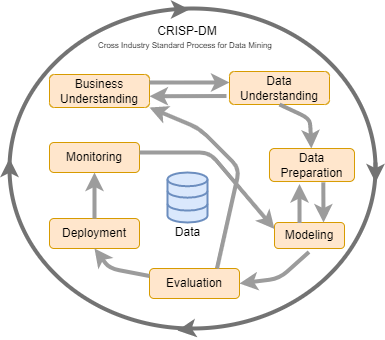

MLOps processes can be organized into phases defined in the CRISP-DM (CRoss Industry Standard Process for Data Mining) process model.

Common MLOps phases are business understanding, data understanding, data preparation, modeling, evaluation and deployment of inference models. In the data preparation phase, data loaded via ETL or ELT pipelines can be split into training and validation subsets.
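The train/validation split of the data preparation phase can be sketched with the standard library. The 80/20 ratio and the fixed seed are illustrative assumptions; a fixed seed keeps the split reproducible across runs.

```python
import random


def train_validation_split(rows, validation_ratio=0.2, seed=42):
    """Shuffle rows reproducibly and split them into training and validation subsets."""
    shuffled = list(rows)
    random.Random(seed).shuffle(shuffled)
    n_val = int(len(shuffled) * validation_ratio)
    return shuffled[n_val:], shuffled[:n_val]


rows = list(range(100))  # hypothetical: 100 prepared records
train, validation = train_validation_split(rows)
print(len(train), len(validation))  # 80 20
```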

Monitoring phases for deployed machine learning models track the degradation of predictive model performance over time and the impact of changes caused by data values, data distributions or business processes.

Cloud tools and services like SAP BTP AI Core or Azure Machine Learning manage machine learning lifecycles in projects efficiently. MLOps environments handle the underlying scalable compute and storage resources to train or serve resource-intensive machine learning models and offer development platforms with tools like Jupyter Notebook integrated with popular ML frameworks (e.g. TensorFlow, scikit-learn).

Data-intensive machine learning workflows can be implemented with tools and frameworks like Metaflow, Argo Workflows and TensorFlow.

Argo Workflows offers custom resource definitions (CRDs) on Kubernetes to support directed acyclic graphs (DAG) for parallel processing. Containerized applications can be bundled together with their resources as Docker images managed with container registries like Azure Container Registry (ACR) or Docker Hub.
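The DAG idea behind such workflow engines can be illustrated with Python's standard `graphlib` module: steps whose dependencies are complete form a stage and could run in parallel. This is not Argo itself; the pipeline steps are hypothetical, and Argo would express the same graph as a DAG template in a Workflow resource.

```python
from graphlib import TopologicalSorter

# Hypothetical ML pipeline: each step maps to the set of steps it depends on
pipeline = {
    "ingest": set(),
    "clean": {"ingest"},
    "features_a": {"clean"},
    "features_b": {"clean"},
    "train": {"features_a", "features_b"},
}


def parallel_stages(graph):
    """Group DAG nodes into stages; nodes within one stage have no mutual
    dependencies and could run in parallel (for example as separate pods)."""
    sorter = TopologicalSorter(graph)
    sorter.prepare()  # raises graphlib.CycleError if the graph is not acyclic
    stages = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())
        stages.append(ready)
        sorter.done(*ready)  # mark the stage as finished to unlock its successors
    return stages


print(parallel_stages(pipeline))
# [['ingest'], ['clean'], ['features_a', 'features_b'], ['train']]
```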

Kubernetes is a popular machine learning compute environment with features like service discovery, load balancing within clusters, automated rollouts and rollbacks, self-healing of failing containers and configuration management.

Advanced compute performance enables processing of machine learning algorithms for Large Language Models (LLM) or Computer Vision with tensors as multidimensional arrays. Hyperparameters like batch size and number of epochs control the resource requirements.

The batch size defines the number of samples (single rows of data) which have to be processed before the model is updated. An epoch represents one complete forward and backward pass through the entire training dataset.

Training with smaller batch sizes requires less memory and updates weights more frequently, but with less accurate estimates of the gradient compared to full-batch training.
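The relationship between dataset size, batch size and the number of weight updates is simple arithmetic: one epoch needs ceil(N / batch_size) updates. The dataset size and epoch count below are hypothetical.

```python
import math


def updates_per_epoch(n_samples: int, batch_size: int) -> int:
    """Number of weight updates in one epoch (the last batch may be smaller)."""
    return math.ceil(n_samples / batch_size)


n_samples, epochs = 10_000, 5  # hypothetical dataset size and epoch count
for batch_size in (1, 32, 10_000):
    per_epoch = updates_per_epoch(n_samples, batch_size)
    # batch size, updates per epoch, total updates over all epochs
    print(batch_size, per_epoch, per_epoch * epochs)
```

A batch size of 1 yields 10,000 updates per epoch, while a batch covering the whole dataset yields a single update per epoch.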

Gradient descent is an iterative machine learning optimization algorithm which minimizes the cost function of model predictions by adjusting the weights to improve model accuracy. Gradient descent variants are batch gradient descent (all samples of the training set per step), mini-batch gradient descent and stochastic gradient descent (one sample per step).
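A minimal mini-batch gradient descent sketch for a linear model y ≈ w·x + b with a mean squared error cost. The learning rate, batch size, epoch count and noise-free training data are illustrative assumptions; setting `batch_size` to the dataset size or to 1 turns it into the batch or stochastic variant.

```python
import random


def minibatch_gradient_descent(xs, ys, lr=0.05, batch_size=4, epochs=500, seed=0):
    """Fit y ≈ w * x + b by minimizing mean squared error with mini-batch gradient descent."""
    rng = random.Random(seed)
    w, b = 0.0, 0.0
    indices = list(range(len(xs)))
    for _ in range(epochs):
        rng.shuffle(indices)  # new sample order in every epoch
        for start in range(0, len(indices), batch_size):
            batch = indices[start:start + batch_size]
            # gradients of the MSE cost with respect to w and b on this mini-batch
            errors = [(w * xs[i] + b) - ys[i] for i in batch]
            grad_w = 2 * sum(e * xs[i] for e, i in zip(errors, batch)) / len(batch)
            grad_b = 2 * sum(errors) / len(batch)
            # step against the gradient to reduce the cost
            w -= lr * grad_w
            b -= lr * grad_b
    return w, b


xs = [0.0, 0.5, 1.0, 1.5, 2.0, 2.5, 3.0, 3.5]
ys = [2 * x + 1 for x in xs]  # hypothetical noise-free data: w = 2, b = 1
w, b = minibatch_gradient_descent(xs, ys)
print(round(w, 3), round(b, 3))
```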

Machine learning models learn functions that predict target values from input features. These decision functions can be trained supervised on data with labeled targets or unsupervised on data without labels.

Classification and regression are supervised training methods with discrete classes or continuous numerical values as targets.

Binary classification has two possible outputs and multi-class classification more than two; both assign exactly one target to one input (1:1). In contrast, multi-label classification assigns more than one target to one input (1:n).
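The difference between the two assignment styles can be sketched on hypothetical per-label scores for one input: multi-class picks the single best label, multi-label keeps every label above a threshold. The 0.5 threshold is an assumption; in practice multi-class models usually produce softmax probabilities and multi-label models independent per-label probabilities.

```python
def multi_class(scores: dict[str, float]) -> str:
    """Multi-class: pick exactly one label (1:1), the highest-scoring class."""
    return max(scores, key=scores.get)


def multi_label(scores: dict[str, float], threshold: float = 0.5) -> list[str]:
    """Multi-label: return every label whose score passes the threshold (1:n)."""
    return [label for label, s in scores.items() if s >= threshold]


# Hypothetical model scores for one support ticket
scores = {"billing": 0.81, "delivery": 0.64, "returns": 0.12}
print(multi_class(scores))  # billing
print(multi_label(scores))  # ['billing', 'delivery']
```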

Regression algorithms predict one numerical continuous target with one input variable 1:1 or with multiple input features n:1.

Time series regression methods predict sequences of time-based data points as targets and enable forecasting of future values outside the range of known data.

Active learning is a supervised learning approach where the algorithm selects the cases that should be labeled next.

Clustering is an unsupervised training method that groups similar objects together. Because of its low memory requirements, K-Means clustering can be used in Big Data scenarios on numerical data.
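A compact sketch of K-Means (Lloyd's algorithm) on hypothetical two-dimensional points. As a simplifying assumption, the first k points serve as initial centroids; libraries usually use random or k-means++ initialization with several restarts.

```python
import math


def kmeans(points, k, iterations=20):
    """Lloyd's algorithm: alternate between assigning points to the nearest centroid
    and moving each centroid to the mean of its assigned points."""
    centroids = list(points[:k])  # simplified initialization (assumption)
    clusters = []
    for _ in range(iterations):
        clusters = [[] for _ in range(k)]
        for p in points:
            nearest = min(range(k), key=lambda c: math.dist(p, centroids[c]))
            clusters[nearest].append(p)
        # move each centroid to the mean of its cluster; keep it if the cluster is empty
        centroids = [
            tuple(sum(coord) / len(cluster) for coord in zip(*cluster)) if cluster else centroids[i]
            for i, cluster in enumerate(clusters)
        ]
    return centroids, clusters


points = [(1.0, 1.0), (1.2, 0.8), (0.9, 1.1), (8.0, 8.0), (8.2, 7.9), (7.8, 8.1)]
centroids, clusters = kmeans(points, k=2)
print(sorted(len(c) for c in clusters))  # [3, 3]
```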

Reinforcement learning, a paradigm distinct from supervised and unsupervised training, trains agents with rewards from their environment as positive feedback for correct actions.

Deep Learning is a subset of machine learning which imitates human brain processing in simplified form with artificial neural networks (algorithms) to solve a variety of supervised and unsupervised machine learning tasks. Deep Learning automates feature engineering and processes non-linear, complex correlations.

Artificial Neural Networks (ANN) are composed of at least three layers (input, hidden and output) to process tabular data with activation functions and learned weights. Recurrent Neural Networks (RNN) process data step by step and are able to make use of sequential information. Convolutional Neural Networks (CNN) are able to extract spatial features in image processing tasks like recognition or classification.

Machine learning offers several optimization options like data preparation, feature engineering, error reduction, performance evaluation and continuous improvements.

Hyperparameter tuning complements feature engineering by adjusting the settings which control the learning process until the model outcome meets the business goals.

Feature selection tries to reduce the number of independent input (explanatory) variables without losing relevant information.

Some selection methods are Filters, which rank features by scores, Wrappers, which evaluate subsets of features with a model, and Embedded methods, which select features as part of model training. Feature scaling normalizes the value ranges of the features in a dataset.
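Min-max scaling, one common form of feature scaling, can be sketched as follows; the order amounts are hypothetical. Standardization (subtracting the mean and dividing by the standard deviation) is a frequent alternative.

```python
def min_max_scale(values: list[float]) -> list[float]:
    """Rescale values linearly into the range [0, 1]."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0 for _ in values]  # constant feature: no spread to scale
    return [(v - lo) / (hi - lo) for v in values]


order_amounts = [120.0, 80.0, 200.0, 40.0]  # hypothetical feature values
print(min_max_scale(order_amounts))  # [0.5, 0.25, 1.0, 0.0]
```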

Typical machine learning model errors are Bias, Variance, Overfitting and Underfitting. Bias is a systematic deviation of predictions from the true values and leads to Underfitting, where the model misses relevant patterns. Variance, calculated as the average of the squared differences from the mean, measures how strongly predictions fluctuate with the training data; high variance leads to Overfitting, where the model is unable to generalize to new data.

Continuous improvement of deployed machine learning models can be realized manually, or automatically if models have the ability to learn from data and recalibrate autonomously.

Model performance evaluation measures the quality of machine learning models with graphs, indicators or statistics. Confusion matrices, which visualize the performance of classification algorithms, lift charts, profit matrices and ROC curves are examples of visual evaluation methods.

Accuracy describes how often the model predicts correctly, Recall the proportion of actual positives that the model identifies, Precision the proportion of true positives among all positive predictions, and the F1 Score combines precision and recall as their harmonic mean.
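The four indicators can be computed directly from the counts of a binary confusion matrix. The counts below are hypothetical, and the sketch does not guard against division by zero (for example when a model makes no positive predictions).

```python
def classification_metrics(tp: int, fp: int, fn: int, tn: int) -> dict[str, float]:
    accuracy = (tp + tn) / (tp + fp + fn + tn)  # share of all predictions that are correct
    precision = tp / (tp + fp)                  # share of positive predictions that are correct
    recall = tp / (tp + fn)                     # share of actual positives that were found
    f1 = 2 * precision * recall / (precision + recall)  # harmonic mean of precision and recall
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}


# Hypothetical confusion matrix counts for a binary classifier
print(classification_metrics(tp=40, fp=10, fn=20, tn=30))
```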

Additionally, SAP measures the performance of classification or regression models with two indicators, Predictive Power (KI) for accuracy and Prediction Confidence (KR) to indicate the robustness of a predictive model with new data sets.

AI Document Use-Cases

Typical intelligent AI document use-cases are the automation of manual document processing or information extraction for knowledge mining systems. Document Intelligence extends optical character recognition (OCR) with prebuilt models to process common document types like invoices or receipts in formats like PDF, JPEG or PNG.

Deep Learning models enable the extraction of content like text, key-value pairs, selection marks, tables or layout information. Intelligent document services identify field data in unstructured documents and store this data in structured form in database tables.

Custom document intelligence solutions have to be trained to return bounding boxes for individual document types.

Example AI document solutions or services are SAP Central Invoice Management, Azure Document Intelligence, SAP BTP Business Entity Recognition or SAP BTP Document Information Extraction (DOX).

Computer Vision

Deep Learning algorithms with multi-layered architectures of Convolutional Neural Networks (CNN) enable computer vision services to manipulate and analyse pixels within images. Filters are a common way to perform image processing tasks like highlighting the edges of objects with Laplace filters.
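Image filtering with a Laplace kernel can be sketched as sliding a 3x3 kernel over a grayscale image. The tiny image is hypothetical, border pixels are skipped instead of padded, and the kernel is the common 4-neighbour variant; because it is symmetric, correlation and convolution give the same result.

```python
# 3x3 Laplace kernel (4-neighbour variant); responds to intensity changes such as edges
LAPLACE = [[0, 1, 0],
           [1, -4, 1],
           [0, 1, 0]]


def apply_filter(image, kernel):
    """Slide a 3x3 kernel over the interior pixels of a grayscale image (no padding)."""
    h, w = len(image), len(image[0])
    out = [[0] * (w - 2) for _ in range(h - 2)]
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            out[y - 1][x - 1] = sum(
                kernel[dy + 1][dx + 1] * image[y + dy][x + dx]
                for dy in (-1, 0, 1)
                for dx in (-1, 0, 1)
            )
    return out


# Hypothetical 4x5 image: dark left part, bright right part (a vertical edge)
image = [[0, 0, 9, 9, 9] for _ in range(4)]
for row in apply_filter(image, LAPLACE):
    print(row)  # [9, -9, 0]: non-zero only next to the edge
```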

Object detection and semantic segmentation are two common use-cases of computer vision. Object detection locates individual items with bounding boxes. Semantic segmentation provides the ability to classify individual pixels in an image depending on the object that they represent.

Knowledge mining enables search capabilities on unstructured data types like text or images by creating search indices. Indexers are crawlers that automate data ingestion from data sources like storage, file systems or databases. Enrichment options can be implemented with skill or function sets to extract and enrich data. Knowledge stores persist data generated by AI enrichment.

Cloud environments offer AI services and scalable resources to develop, train, serve and operate machine learning models for Predictive or Generative AI solutions. Tools like Azure AI Studio or SAP AI Launchpad enable the orchestration of containerized compute resources on Kubernetes clusters, the storage of large datasets for training purposes and lifecycle management for training or inference models.

SAP Business AI machine learning capabilities are available as cloud services on the SAP Business Technology Platform (SAP BTP) or embedded into S/4HANA Cloud Core as native SAP HANA libraries. SAP Business AI cloud capabilities can be extended with AI/ML services on Hyperscaler platforms like Microsoft Azure, Amazon AWS and Google Cloud (GCP) platforms.

Some SAP BTP Business AI Services are ready to be used in end-to-end business process automation, business document processing, business recommendation or central invoice management scenarios.

Cloud platforms offer scalable compute environments like Kubernetes clusters to implement development, training and inferencing of Machine Learning models. ML instance types can be implemented as Kubernetes custom resource definitions (CRD) with node selection and resource settings.

AWS SageMaker, Azure Machine Learning and SAP AI Core offer Kubernetes clusters as compute targets with operators for defined custom resources (CRD). Kubernetes groups cluster resources with namespaces and orchestrates scalable Pods in AI pipelines.

Argo Workflows can be integrated as container native workflow engine with templates synchronized with Git repositories.

Azure offers AI capabilities as multi-service or single-service resources with RESTful APIs to build applications with AI capabilities. Bundled Azure AI Services require only one key and URL for combined language, vision or search AI solutions.

Azure AI Studio supports the complete machine learning lifecycle from data preparation, training and deployment to inferencing and model evaluation, with integrated machine learning frameworks such as MLflow. Azure AutoML automatically identifies the best algorithms and parameters for specific use-cases.

OCR capabilities are available for documents with the Document Intelligence service and for images with Azure AI Vision.

Azure AI Search knowledge mining creates a searchable knowledge store from huge amounts of structured or unstructured data. Search indices with schemas are defined as JSON structures.

Document cracking is the first stage of the indexing process; it opens files and extracts their content.

Enrichment pipelines integrate built-in skills like OCR, translation or AI Language capabilities into skillsets to provide insights which can be stored in knowledge stores.

Customer-Managed Keys (CMK) require Azure Key Vault and increase both index size and query times.

Azure AI Vision offers services with pre-trained models to realize OCR, Image Analysis, Face and Video Analysis AI solutions.

Optical Character Recognition (OCR) offers text recognition or text extraction capabilities for images. Image analysis returns descriptive phrases as image captions with confidence scores.

The Microsoft Florence foundation model is a pre-trained general model on which you can build multiple adaptive models for specialized tasks like image classification, object detection, captioning or tagging.

Azure AI Custom Vision is an image recognition service that allows you to build and deploy your own image models to predict labels or detect objects with supervised machine learning. Models converted to compact domains can be retrained and exported for offline usage.

The safety system layer includes content filters that suppress prompts and responses based on severity levels.
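The threshold logic of such a content filter can be sketched as follows. The severity scale (safe, low, medium, high), the harm categories and the scores are assumptions for illustration; in a real safety layer the scores would come from classification models run on each prompt and response.

```python
from enum import IntEnum


class Severity(IntEnum):
    """Hypothetical ordered severity scale for detected harmful content."""
    SAFE = 0
    LOW = 1
    MEDIUM = 2
    HIGH = 3


def is_blocked(category_severities: dict[str, Severity], threshold: Severity = Severity.MEDIUM) -> bool:
    """Block a prompt or response if any harm category reaches the configured threshold."""
    return any(sev >= threshold for sev in category_severities.values())


# Hypothetical classifier output for one prompt and one model response
prompt_scores = {"hate": Severity.SAFE, "violence": Severity.LOW}
response_scores = {"hate": Severity.SAFE, "violence": Severity.HIGH}
print(is_blocked(prompt_scores))    # False
print(is_blocked(response_scores))  # True
```

Lowering the threshold makes the filter stricter, at the cost of suppressing more borderline content.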

Azure AI Language offers language understanding and analyzing features to realize cloud AI solutions with Natural Language Processing (NLP) capabilities. Some of these preconfigured, customizable NLP features are Named Entity Recognition (NER), Personally Identifiable Information (PII) detection, Language detection, Summarization, Key Phrase Detection and Question Answering.

Conversational Language Understanding (CLU) enables the implementation of bot capabilities like intent prediction and important information extraction from incoming utterances.

Orchestration workflow projects connect bots with Conversational Language Understanding (CLU) or Question Answering projects.

AutoML automates iterative machine learning development runs and scores and ranks them by specified metrics. Enabling the "Explain best model" option helps the resulting model meet the transparency principle.

Azure AI Services provide two subscription keys, which can be securely stored in Azure Key Vault, so that one key can be regenerated while the other stays in use, without service interruptions. Clients can use these subscription keys to initiate token-based authentication.

Azure AI services support Microsoft Entra ID authentication with managed identities or service principals, with the difference that managed identities can only be assigned to Azure resources. System-assigned managed identities are coupled to the lifecycle of their linked resource, in contrast to user-assigned managed identities, which exist independently of any single resource.

Role-Based Access Control (RBAC) enables least-privilege security, e.g. restricting the rotation of subscription keys to users with contributor roles.

Network access to Azure AI services can be restricted to selected Azure networks, using private endpoints or with firewall settings for internet or on-premises access. Private Link connections to private endpoints ensure that traffic remains on the Azure backbone network.

SAP Business AI Machine Learning (ML) solutions offer enterprise ready technologies with lifecycle management to deliver trusted outcomes like recommendations or predictions.

SAP Business AI enables data-driven decisions and enterprise automation based on machine learning capabilities like predictions or recommendations. Intelligent Data-to-Value workflows can extend S/4HANA Cloud with SAP BTP data management, analytics and Business AI services.

Artificial Intelligence simulates human intelligence in SAP Business AI processes with knowledge from data learned by Machine Learning (ML) models.

Machine Learning is about training models on input features to learn functions that infer target values as predictions. Machine learning combines data science and software development to enable Business and Generative AI capabilities embedded into intelligent S/4HANA Cloud processes.

SAP AI Core is the Business AI engine on the Business Technology Platform (SAP BTP) with lifecycle management, multi-cloud storage, scalable compute capabilities and multi-tenancy enabled by resource groups.

The AI Core SDKs integrate open-source frameworks for AI/ML workflow implementation like Metaflow and Argo Workflows and enable custom implementations of MLOps processes integrated with Git repositories.

SAP AI Core machine learning models are containerized as Docker images and managed in Docker registries. Kubernetes orchestrates these containers on training and serving clusters. Multi-cloud object stores like AWS S3 or Azure Storage can be integrated to store artifacts like referenced datasets or machine learning models.

Argo Workflows implements Custom Resource Definitions (CRD) to orchestrate workflows on Kubernetes clusters. The workflow template definitions are stored in Git repositories and integrated into CI/CD pipelines. Serving templates based on KServe define the deployment of the Docker images on inference servers.

Machine learning frameworks like TensorFlow 2 and Detectron2 offer algorithms to detect objects in images with localization and classification capabilities. SAP AI Core supports TensorFlow neural networks with high-level APIs like Keras with optimized user experience and enhanced scaling options.

Furthermore, the SAP AI Core SDK Computer Vision package extends Detectron2 to integrate image processing machine learning (ML) scenarios. Object detection quality can be measured e.g. with Intersection over Union (IoU), which compares predicted and ground-truth bounding boxes, to evaluate the inference accuracy.
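IoU divides the overlap area of a predicted and a ground-truth bounding box by the area of their union. A minimal sketch, assuming boxes given as (x_min, y_min, x_max, y_max) with hypothetical coordinates:

```python
def iou(box_a, box_b):
    """Intersection over Union of two axis-aligned boxes (x_min, y_min, x_max, y_max)."""
    # corners of the overlapping rectangle (may be empty)
    ix_min, iy_min = max(box_a[0], box_b[0]), max(box_a[1], box_b[1])
    ix_max, iy_max = min(box_a[2], box_b[2]), min(box_a[3], box_b[3])
    intersection = max(0, ix_max - ix_min) * max(0, iy_max - iy_min)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - intersection
    return intersection / union if union else 0.0


predicted = (10, 10, 50, 50)     # hypothetical detector output
ground_truth = (20, 20, 60, 60)  # hypothetical labeled box
print(round(iou(predicted, ground_truth), 3))  # 0.391
```

An IoU of 1.0 means a perfect match and 0.0 means no overlap; detection benchmarks often count a prediction as correct above a chosen threshold such as 0.5.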

SAP S/4HANA Intelligent Scenario Lifecycle Management (ISLM) offers Fiori apps to manage pre-delivered and custom intelligent scenarios, either embedded, based on the SAP HANA Machine Learning (ML) Libraries (PAL, APL), or side-by-side, deployed on the SAP Business Technology Platform (BTP).

Embedded ISLM scenarios are integrated in the ABAP layer with low computing resource requirements and access to SAP HANA Machine Learning Libraries. Typical examples are trend forecasting, classifications or categorizations on structured S/4HANA data.

SAP HANA offers three machine learning libraries based on the Application Function Library (AFL) framework for data-intensive and complex operations.

Built-in functions and specialized algorithms of the Predictive Analysis Library (PAL) require knowledge of statistical methods and data mining techniques. The Automated Predictive Library (APL) automates most of the steps in applying machine learning algorithms. External machine learning frameworks for building models, like Google TensorFlow, can be integrated on-premises with the External Machine Learning Library (EML).