This page describes some aspects of hybrid multi-cloud data architectures of SAP Cloud and S/4HANA intelligent enterprises.

Hybrid multi-cloud data management and analytics platforms enable organizations to implement data-driven processes based on data analytics and AI/Machine Learning (ML). Cloud data environments have to balance data governance and security requirements with self-service options for data democratization, which empower business users with insights.

Integrated cloud data platforms store, process and orchestrate large distributed data volumes to reduce data silos and avoid monolithic big data lakes.

Metadata driven data governance across heterogeneous systems enables unified enterprise views on data. Data catalogs enable metadata based data access to generate data insights from origin to consumption visualized with data lineage methods.
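
To make the idea of lineage from origin to consumption more concrete, the following minimal sketch walks a tiny metadata catalog backwards from a consumed dataset to its sources. The catalog structure and all dataset names (`sales_dashboard`, `sales_gold` and so on) are hypothetical and only serve as illustration; real data catalogs expose lineage through their own APIs.

```python
# Hypothetical catalog: each dataset lists the datasets it is derived from.
CATALOG = {
    "sales_dashboard": {"upstream": ["sales_gold"]},
    "sales_gold": {"upstream": ["sales_silver", "fx_rates"]},
    "sales_silver": {"upstream": ["sales_raw"]},
    "sales_raw": {"upstream": []},
    "fx_rates": {"upstream": []},
}


def trace_lineage(dataset, catalog):
    """Return all upstream datasets of `dataset`, nearest ancestors first."""
    seen = []
    queue = list(catalog[dataset]["upstream"])
    while queue:
        current = queue.pop(0)  # breadth-first traversal
        if current in seen:
            continue
        seen.append(current)
        queue.extend(catalog[current]["upstream"])
    return seen


def origins(dataset, catalog):
    """Return only the ancestors that have no upstream themselves."""
    return [d for d in trace_lineage(dataset, catalog) if not catalog[d]["upstream"]]


lineage = trace_lineage("sales_dashboard", CATALOG)
sources = origins("sales_dashboard", CATALOG)
```

Here `lineage` lists every dataset the dashboard depends on, and `sources` narrows that down to the original systems of record.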

Modern data warehouses, cloud analytics services and data-driven SaaS applications can be built on data lakes to pre-process loaded data and limit further data processing on subsets with relevant data.

Data Fabric, Data Mesh and Data Lakehouse are modern hybrid multi-cloud data architecture patterns, which can be used in combination to implement customer-specific requirements. Data Fabric and Data Lakehouse patterns are mainly driven by vendor products or services, in contrast to Data Mesh, which is primarily an organizational approach.

Data Fabric architectures enable centrally governed data management platforms for distributed data with enterprise wide real-time views on integrated data sources. Data fabric architecture designs are technology-centric and metadata-driven to allow streamlined central and local data processing in hybrid multi-cloud environments.

AI/Machine Learning (ML) and low-code technologies play a major role in simplifying and automating the data delivery processes of Data Fabrics. Business users benefit from data democratization through the self-services offered.

Data Lakehouses combine elements of data warehouses and data lakes. They offer ACID transaction support (e.g. with Delta Lake), a curated data layer for structured and unstructured data, schema support, data governance and end-to-end streaming for real-time scenarios.

Data Meshes focus on organizational changes to shift responsibilities from central data engineer teams of monolithic data warehouses to domain-driven teams. This decentralized approach eliminates the bottleneck of centralized data teams (engineers and analysts) and enables data engineering embedded in business units with domain expertise, to perform local processing and governance of distributed data.

Cloud Data Ingestion Layers connect with various sources of structured, semi-structured and unstructured data. Data Ingestion Layers support various protocols like ODBC/JDBC to connect with various databases and enable the integration of batch or streaming data sources.

Data ingestion processes for analytics or AI/ML typically consist of three steps: Extract (E), Load (L) and Transform (T), which can be performed in different sequences, ELT or ETL.

ELT processing reduces the resource contention on source systems and enhances the architectural flexibility with multiple supported transformations.
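
The difference between the two sequences can be sketched with Python's built-in `sqlite3` module standing in for the target system. This is only an illustration under stated assumptions: the sample records, table names and helper functions are hypothetical. In the ETL variant the pipeline cleans the data before loading; in the ELT variant the raw rows are loaded unchanged and the target database performs the transformation with SQL.

```python
import sqlite3

# Hypothetical raw records as they might arrive from a source system.
RAW_ORDERS = [
    ("1001", " de ", "20.50"),
    ("1002", "US", "5.00"),
    ("1003", "de", "7.25"),
]


def run_etl(conn):
    """ETL: transform inside the pipeline, then load only the cleaned rows."""
    cleaned = [
        (int(order_id), country.strip().upper(), float(amount))
        for order_id, country, amount in RAW_ORDERS
    ]
    conn.execute("CREATE TABLE orders_etl (order_id INTEGER, country TEXT, amount REAL)")
    conn.executemany("INSERT INTO orders_etl VALUES (?, ?, ?)", cleaned)


def run_elt(conn):
    """ELT: load the raw rows unchanged, then transform in the target with SQL."""
    conn.execute("CREATE TABLE orders_raw (order_id TEXT, country TEXT, amount TEXT)")
    conn.executemany("INSERT INTO orders_raw VALUES (?, ?, ?)", RAW_ORDERS)
    conn.execute(
        """
        CREATE TABLE orders_elt AS
        SELECT CAST(order_id AS INTEGER) AS order_id,
               UPPER(TRIM(country))      AS country,
               CAST(amount AS REAL)      AS amount
        FROM orders_raw
        """
    )


def revenue_by_country(conn, table):
    """Aggregate the cleaned table; `table` is one of the two names created above."""
    query = f"SELECT country, SUM(amount) FROM {table} GROUP BY country ORDER BY country"
    return conn.execute(query).fetchall()


conn = sqlite3.connect(":memory:")
run_etl(conn)
run_elt(conn)
etl_result = revenue_by_country(conn, "orders_etl")
elt_result = revenue_by_country(conn, "orders_elt")
```

Both variants end with the same curated result. With ELT the raw table stays available in the target, so further transformations can be added later without going back to the source system.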

Storing all kinds of data (structured, semi-structured, unstructured) from hybrid multi-cloud sources in one Cloud Data storage layer optimizes data management and processing. The Cloud Data storage layer should offer curated data that is organized, consumption-ready and conforms to organizational standards and data models.

The Cloud Data Lakehouse concept is one approach to implement this storage layer, which can be extended with a Delta Lake curated zone. Data is separated into folders (bronze, silver, gold) that reflect its enrichment status. Delta Lake offers database capabilities with data versioning through ACID transactions and schema support.
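
The bronze, silver and gold zones can be pictured as successive refinement steps. The sketch below uses plain Python structures instead of Delta Lake tables; the sensor records and the functions `to_silver` and `to_gold` are hypothetical and only illustrate how the enrichment status grows from zone to zone.

```python
# Hypothetical raw sensor readings as landed in the bronze zone.
RAW_READINGS = [
    {"sensor": "a", "temp": "21.5"},
    {"sensor": "a", "temp": "bad"},   # invalid value, dropped in silver
    {"sensor": "b", "temp": "19.0"},
    {"sensor": "a", "temp": "22.5"},
]


def to_silver(bronze):
    """Silver: validated and typed records; rows that cannot be parsed are skipped."""
    silver = []
    for row in bronze:
        try:
            silver.append({"sensor": row["sensor"], "temp": float(row["temp"])})
        except ValueError:
            continue
    return silver


def to_gold(silver):
    """Gold: consumption-ready aggregate, here the average temperature per sensor."""
    grouped = {}
    for row in silver:
        grouped.setdefault(row["sensor"], []).append(row["temp"])
    return {sensor: sum(values) / len(values) for sensor, values in grouped.items()}


lake = {"bronze": RAW_READINGS}
lake["silver"] = to_silver(lake["bronze"])
lake["gold"] = to_gold(lake["silver"])
```

The bronze data is kept untouched, so the silver and gold zones can be rebuilt at any time when cleaning or aggregation rules change.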

Cloud metadata governance layers are built with metadata catalogs. Metadata stores enable unified processing of structured, semi-structured and unstructured data.

Cloud Data compute layers should be separated from the storage layer so that both can scale independently. Massively Parallel Processing (MPP) capabilities make it possible to build high-performance architectures. High availability is implemented by distributing Cloud Data compute layers over multiple availability zones.
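
The scatter-gather principle behind MPP can be sketched in a few lines: the data is partitioned, each worker computes a partial result on its own partition, and the partial results are combined. The example uses `ThreadPoolExecutor` from the standard library purely as a stand-in for independent compute nodes; the function names are hypothetical, and Python threads do not provide the real parallel speed-up of an MPP engine.

```python
from concurrent.futures import ThreadPoolExecutor


def partition(values, parts):
    """Distribute values round-robin over `parts` partitions."""
    return [values[i::parts] for i in range(parts)]


def partial_sum(chunk):
    """Work done by one compute node on its own partition."""
    return sum(chunk)


def mpp_sum(values, workers=4):
    """Scatter the partitions to the workers, then gather and combine the results."""
    chunks = partition(values, workers)
    with ThreadPoolExecutor(max_workers=workers) as pool:
        partials = list(pool.map(partial_sum, chunks))
    return sum(partials)


total = mpp_sum(list(range(1, 101)))
```

Because every partition is processed independently, adding workers (compute) does not require changing how the data is stored, which mirrors the separation of compute and storage described above.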

Analytic runtimes of Cloud Data processing layers offer access to data with SQL or Spark. SQL queries are unified across structured, semi-structured and unstructured data.

Cloud Data consumption layer shall be compatible with preferred Business Intelligence services.

Data-driven organizations enhance agility and performance with data virtualization, which abstracts access to hybrid multi-cloud data sources. Data virtualization separates storage from consumption and eliminates the need to replicate data. Data Federation is a technology that combines different virtualized cloud data stores so that they act as one.

Virtualization enables query processing in the source cloud systems, which facilitates data management with the available source semantic layer and business context (e.g. organization structure). Virtual live data does not have to be moved or duplicated, is always up to date and can facilitate compliance with company policies such as restrictions on data locations.
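
As a rough analogy for federation, the sketch below attaches a second SQLite database to a connection and joins tables from both stores in a single query, without copying any rows. The schema names `main` and `remote`, the tables and the helper `customer_revenue` are hypothetical; real virtualization layers access remote systems through adapters rather than local files or memory.

```python
import sqlite3

conn = sqlite3.connect(":memory:")                     # stands in for the local store
conn.execute("ATTACH DATABASE ':memory:' AS remote")   # stands in for a second source

conn.execute("CREATE TABLE main.customers (id INTEGER, name TEXT)")
conn.execute("CREATE TABLE remote.orders (customer_id INTEGER, amount REAL)")
conn.executemany("INSERT INTO main.customers VALUES (?, ?)", [(1, "Acme"), (2, "Globex")])
conn.executemany(
    "INSERT INTO remote.orders VALUES (?, ?)", [(1, 100.0), (1, 50.0), (2, 25.0)]
)


def customer_revenue(connection):
    """One query spanning both stores; the data stays where it is."""
    return connection.execute(
        """
        SELECT c.name, SUM(o.amount)
        FROM main.customers AS c
        JOIN remote.orders AS o ON o.customer_id = c.id
        GROUP BY c.name
        ORDER BY c.name
        """
    ).fetchall()


before = customer_revenue(conn)

# A change in the source is visible in the next query, no reload required.
conn.execute("INSERT INTO remote.orders VALUES (2, 75.0)")
after = customer_revenue(conn)
```

The second call already reflects the new order, which illustrates why virtual live data is always up to date, whereas a replicated copy would first need another load.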

Data Replication stores data redundantly, and queries have to be processed in the target system. Extract Load Transform (ELT) pipelines are typically used to ingest data from an on-premises database to the cloud. ELT reduces the resource contention on source systems and enhances the architectural flexibility with multiple supported transformations.

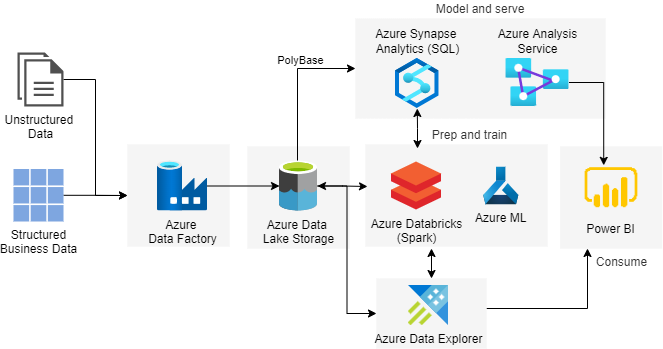

Modern Azure data warehouse platforms can be built on Azure Data Lake with high-performance data ingestion to enable analytics, BI reporting and data-driven serverless SaaS applications. Efficient data access with hierarchical namespaces on Blob storage enables enterprise big data analytics based on Azure Data Lake Storage Gen2. Data lakes can store huge amounts of frequently used data for SaaS applications or BI reports as presentation layers.

Azure Data Factory (ADF) data ingestion implements ETL data integration and transformations with scale-out capabilities. The ADF management interface makes it possible to orchestrate multiple activities (processing steps) of data pipelines. Transformations with mapping data flows on serverless infrastructure can be visually designed and monitored.

ADF pipelines perform actions with integration logic like running SQL Server Integration Services (SSIS) packages, which are similar to ADF pipelines. Linked services define required information to link disparate data sources like SAP HANA, S/4HANA, SAP ECC or BW to the data factory with available connectors. Automated uploads can be implemented with copy activities e.g. from Azure Blob storage for reporting to Azure SQL DB.

Azure offers solutions to generate value from data, with data lakes storing unstructured, raw data that is cleaned and structured by data warehouses for analytics or data-driven business processes.

Azure Databricks Lakehouse architectures combine the governance of data warehouses with Spark-based big data processing for analytics and machine learning. Databricks supports Delta Lake with tables for ACID transactions and the Unity Catalog for data governance and discovery. Azure Data Lake Storage credential passthrough is a premium feature which allows storage access without configured service principals.

Azure Synapse Analytics combines data integration, enterprise data warehousing and big data analytics with massive parallel processing (MPP) to run high-performance analytics. The data integration capabilities (pipelines, data flows) are based on a subset of Azure Data Factory features.

Synapse Analytics uses the PolyBase virtualization technology to access external data stored in Azure Blob storage or Azure Data Lake Store via the T-SQL language. Data from disparate sources (such as on-premises systems or IoT) can be aggregated with the data warehouse features.

Azure Synapse Link integrates SQL or Cosmos DB databases with Azure Synapse Analytics to provide near real-time hybrid transactional and analytical processing (HTAP) on operational data.

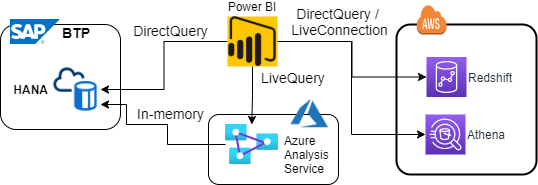

Azure Analysis Services enables companies to implement tabular semantic data models for business users to query data interactively with Power BI which offers integrated Analysis Services premium features. Tabular models of connected databases can run in-memory (default for e.g. SAP HANA, PostgreSQL) or in DirectQuery (to overcome memory limitations for e.g. Azure SQL) mode.

Azure Data Explorer is a fully managed, scalable, high-performance big data analytics platform, optimized for interactive, ad-hoc queries over various data types (structured, semi-structured, unstructured) ingested into a single data collection.

Some Azure Data Explorer use-cases are log analytics (clickstream data, product logs), time series analytics (e.g. IoT) with built-in capabilities, general-purpose exploratory analytics e.g. on streaming data or scoring of high-quality machine learning models (applying on data) created and managed with Azure ML.

Power BI provides visualization and reporting insights using LiveQuery without datasets or DirectQuery to Power BI datasets of connected data sources. Data imports enable fast processing, with advanced and premium features for large datasets or large-scale support.

Some sample connection options for Power BI are DirectQuery to SAP HANA, Amazon Redshift or Athena and LiveQuery to Azure Analysis Services.

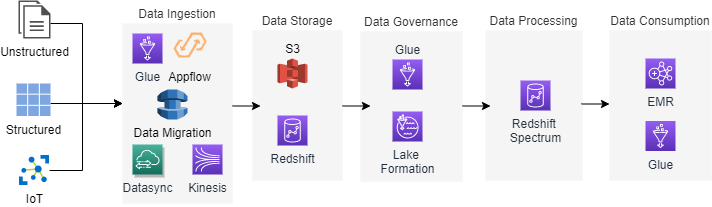

| Layer / Service | Short Description |
|---|---|
| Ingestion Layer | |
| AWS Glue | Serverless data integration service. Glue offers built-in connectors to common sources like S3, Kinesis, Redshift, Postgres. Furthermore, AWS Glue allows development of custom Spark, JDBC or Athena based connectors. Glue crawlers populate the AWS Glue Data Catalog with tables from source data stores. |
| AppFlow | Ingests data from SaaS applications. |
| Data Migration | Ingests data from several operational RDBMS and NoSQL databases. |
| DataSync | Ingests files from NFS and SMB. |
| Kinesis | Kinesis Firehose ingests streaming data. Kinesis Data Streams enables building real-time analytics pipelines. |
| Storage Layer | |
| S3 | Stores structured, semi-structured and unstructured data, typically using open file formats. |
| Amazon Redshift | Provides petabyte-scale data warehouse storage for highly structured data. |
| Catalog Layer | Discovery and governance. The layer stores the schemas of structured or semi-structured datasets in Amazon S3. |
| AWS Glue Data Catalog | Central metadata catalog for the entire data landscape. |
| AWS Lake Formation | Offers a Data Catalog based on Glue. Enables row-level security. |
| Processing Layer | |
| Redshift Spectrum | SQL based ELT. |
| Big Data Processing | Apache Spark jobs |
| AWS EMR | Big Data Spark job processing. |
| AWS Glue | Spark jobs. |
| Near real-time ETL | |
| Kinesis Analytics | Spark Streaming with Kinesis Analytics. |
| AWS EMR | Big Data Spark Streaming. |
| AWS Glue | Spark Streaming. |
| Consumption Layer | |
| QuickSight | Machine learning powered. Generated data models automatically understand the meaning and relationships of business data. |
| Data Virtualization | Athena and Redshift Spectrum are serverless services offering virtualization features like Federated Query and User Defined Functions. Both services have native integration with the AWS Glue metadata catalog as well as the Hive Metastore and use SQL to query virtual tables of data stored on S3. Both services provide JDBC drivers. Athena is a standalone interactive service, whereas Spectrum is part of the Redshift stack. |
| Amazon Redshift | Built on an MPP architecture. AWS Glue virtualizes Redshift Spectrum data access to S3. External tables define schemas and location of S3 data files. Virtualized tables can be joined with tables in Redshift. Federated Query allows you to run a Redshift query across additional databases and data lakes like S3, Amazon RDS or Aurora. |
| Athena | Query results stored on S3 can be loaded into Redshift. |

SAP Business Technology Platform provides cloud data and analytics services.

Calculation models are translated into a relational SQL representation whenever possible to execute SQL engine optimization. This unfolding transformation is applied globally and automatically.

The default optimization mode of calculation views is calculation engine. To avoid two optimizations of calculation views within SQL statements, the "Execute in SQL Engine" option overrides the default optimization. This behavior leads to better and more efficient optimization results.

The SQL plan marks partly successful transformations of the Calculation Engine as "ceQoPop".

SAP HANA Cloud is a fully managed, in-memory cloud database as a service (DBaaS). HANA Cloud Service (HCS) instances are deployed as single tenant databases.

HANA Cloud scales compute and data storage (memory + disk) independently, both vertically (up/down) and horizontally (in/out).

HANA Cloud does not support auto-scaling. Decreasing the memory size requires a service request. Furthermore, HANA Cloud cannot scale automatically based on the current workload, nor dynamically to speed up a currently running query.

Main characteristics:

Some HANA Cloud use cases:

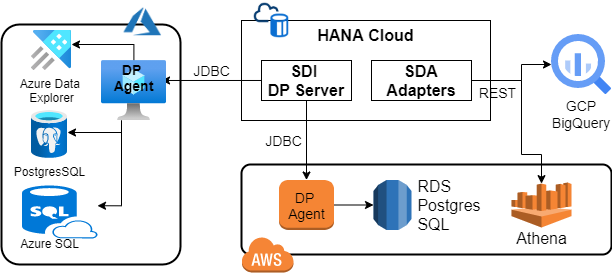

Virtualization, Federation and Replication are supported by HANA Smart Data Access (SDA) and Smart Data Integration (SDI).

Features of natively available HANA SDA adapters:

SDI features:

Depending on the location, the SDI dpagent can use TCP/IP or HTTP (secure SSL enabled).

Some SDI adapters:

Main features:

Calculation views are the main HANA graphical design time objects, which will be converted to SQL processible column views at run-time. They can be enhanced with read-only SQLScript procedures and functions. These procedures or functions return tables or scalar values of the view or calculate view input parameters for dynamic placeholders.

Pushing down input parameters as filters, pruning (join, union, column) and parallelization are optimization concepts.

There are three types of calculation views:

Dataflow scenarios can be composed in the Calculation View Modeler with a range of node operations (e.g. join, aggregation) on data sources (like tables, views, functions).

Other modeling features, which can be used standalone or within calculation views:

SAP HANA's holistic security framework comprises user management, authentication, authorization, anonymization, masking, encryption and auditing:

HANA's column-based storage and processing is optimized for on-line analytical processing (OLAP). Column tables enable multi-core parallel processing and fast aggregations.

Row storage is regarded as the optimal storage design for on-line transactional processing (OLTP).
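
The two access patterns can be illustrated with plain Python structures. This is only a conceptual sketch with hypothetical sample data and function names, not a representation of how HANA stores tables internally: a transactional lookup reads one complete record from the row layout, while an analytical aggregation scans a single column of the column layout.

```python
FIELDS = ("order_id", "country", "amount")

# Row layout: one tuple per record, all fields stored together.
ROWS = [
    (1, "DE", 20.0),
    (2, "US", 5.0),
    (3, "DE", 7.5),
]


def to_column_store(rows, fields):
    """Column layout: one list per field, values of a column stored together."""
    return {name: [row[i] for row in rows] for i, name in enumerate(fields)}


def fetch_order(rows, order_id):
    """OLTP-style access: return one complete record."""
    for row in rows:
        if row[0] == order_id:
            return row
    return None


def total_amount(columns):
    """OLAP-style access: aggregate by scanning only the column that is needed."""
    return sum(columns["amount"])


COLUMNS = to_column_store(ROWS, FIELDS)
order = fetch_order(ROWS, 2)
revenue = total_amount(COLUMNS)
```

The aggregation never touches `order_id` or `country`, which is the reason column layouts suit analytical workloads over wide tables.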

HANA Cloud column store tables are automatically compressed to reduce size in memory and CPU cache. Compression is applied during the delta merge operation.

Dictionary encoding compression removes the repetition of values in a column. Each distinct value is stored only once in the dictionary store. Integers representing unique dictionary values are addressable with indices. Some further compression techniques are run-length, cluster and prefix encoding.
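
Dictionary encoding and run-length encoding can be sketched as follows. The functions are a simplified illustration with hypothetical names and do not reflect the internal HANA implementation: each distinct value is stored once in a sorted dictionary, the column itself becomes a list of integer value IDs, and consecutive repeats of the same ID are collapsed into (ID, count) pairs.

```python
def dictionary_encode(column):
    """Store each distinct value once; represent the column as integer value IDs."""
    dictionary = sorted(set(column))
    index = {value: i for i, value in enumerate(dictionary)}
    return dictionary, [index[value] for value in column]


def run_length_encode(value_ids):
    """Collapse consecutive repeats into (value_id, count) pairs."""
    runs = []
    for value_id in value_ids:
        if runs and runs[-1][0] == value_id:
            runs[-1][1] += 1
        else:
            runs.append([value_id, 1])
    return [tuple(run) for run in runs]


def decode(dictionary, runs):
    """Rebuild the original column from the dictionary and the runs."""
    return [dictionary[value_id] for value_id, count in runs for _ in range(count)]


column = ["DE", "DE", "DE", "US", "US", "FR", "FR", "FR", "FR"]
dictionary, value_ids = dictionary_encode(column)
runs = run_length_encode(value_ids)
```

In this example nine strings shrink to a dictionary of three entries plus three runs. Run-length encoding pays off most when equal values are adjacent, for instance in sorted columns.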

Columns can be partitioned to improve performance and only columns needed for processing are actually loaded to memory.


The Integration Solution Advisory Methodology (ISA-M) describes data and analytics integrations. These cloud integrations are part of data-to-value scenarios in hybrid multi-cloud environments.

SAP Data Intelligence is a unified data management solution for data orchestration, with data catalog and integration capabilities. Comprehensive metadata management rules optimize governance and minimize compliance risk.

Some features:

SAP Data Warehouse Cloud is a modeling and data governance environment, built on the HANA Cloud database.

DWC spaces provide isolated virtual environments for

Spaces can represent business domains in Data Mesh architectures. They can also be used to access HANA HDI containers with created SQL users.

Some characteristics of the limited Data Flow modeler embedded in SAP Data Warehouse Cloud:

Various DWC integration requirements can be implemented with HANA SDI (Smart Data Integration) and SDA. The HANA SDI DP agent offers system connection options. To connect to S/4HANA Cloud, the DP agent has to be installed on a separate server. Additionally, DWC supports Open Connectors.

S-API extractors are mostly regarded as a means of data acquisition based on S/4HANA compatibility views or function modules. They are not recommended for virtualization and are of limited suitability for replication with DWC.

Some DWC connectivity options to Hyperscalers:

SAP Analytics Cloud is a cloud service for:

These SAC features are powered by artificial intelligence and machine learning technologies.

Datasets are data collections suitable for ad-hoc analysis, as a basis for stories (embedded or public) and as Smart Predict data sources.

Models allow multiple data sources and planning scenarios.

Data modeling entails e.g.

SAC can access data through live connections or after data import. Live connections are supported to cloud (e.g. BTP, S/4HANA Cloud, DWC) and on-premises (e.g. BW, HANA, S/4HANA) data sources. In combination with DWC, various other connection options are possible.

Data connections can be created for live and acquired data:

All live data connections, except those to DWC, require a proxy live data model. This proxy model tells SAP Analytics Cloud how to query data from the source model (HANA calculation view, BW query).

Live connections from SAP Analytics Cloud to Data Warehouse Cloud, in combination with DWC Remote connection options, enable further SAC live connectivity data sources.

SAP Analytics Cloud stories present data views with visualizations such as charts, tables and geo maps. Business users can explore data interactively with story views to find insights and make decisions. Both views are based on models defined with dimensions (categories) and measures (quantities) on data.
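
The relationship between dimensions and measures can be shown with a small aggregation sketch. The fact records and the `aggregate` helper are hypothetical and independent of SAC: dimensions define the categories to group by, and a measure is the quantity that is summed within each group, which is essentially what a chart or table in a story displays.

```python
from collections import defaultdict

# Hypothetical fact records with two dimensions and two measures.
FACTS = [
    {"region": "EMEA", "product": "A", "revenue": 100, "quantity": 2},
    {"region": "EMEA", "product": "B", "revenue": 50, "quantity": 1},
    {"region": "APJ", "product": "A", "revenue": 70, "quantity": 3},
    {"region": "EMEA", "product": "A", "revenue": 30, "quantity": 1},
]


def aggregate(facts, dimensions, measure):
    """Sum one measure for every combination of the chosen dimensions."""
    totals = defaultdict(int)
    for row in facts:
        key = tuple(row[dimension] for dimension in dimensions)
        totals[key] += row[measure]
    return dict(totals)


revenue_by_region = aggregate(FACTS, ["region"], "revenue")
quantity_by_region_product = aggregate(FACTS, ["region", "product"], "quantity")
```

Switching the list of dimensions changes the level of detail of the view without touching the underlying records.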

SAC offers templates (provided by SAP or customized) and predefined story page types (responsive for mobile, canvas, grid, smart discovery form) as starting point for stories.

Smart Predict is an SAP Analytics Cloud feature that helps analysts answer business questions about future trends, e.g. targeting a sales campaign at the customers who are most likely to buy a specific product.

The coupled date and target value information is called the signal. Signals will be analyzed by Smart Predict Time Series Forecasting.
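
A signal in this sense can be represented as a list of (date, value) pairs. The sketch below forecasts the next value with a simple moving average. This baseline is deliberately swapped in for illustration and is not the algorithm used by Smart Predict; the sample values and the function name are hypothetical.

```python
from datetime import date

# Hypothetical signal: each entry couples a date with the target value.
SIGNAL = [
    (date(2024, 1, 1), 100.0),
    (date(2024, 2, 1), 110.0),
    (date(2024, 3, 1), 120.0),
    (date(2024, 4, 1), 130.0),
]


def moving_average_forecast(signal, window=3):
    """Forecast the next value as the mean of the last `window` observations."""
    values = [value for _, value in sorted(signal)]
    if len(values) < window:
        raise ValueError("signal is shorter than the averaging window")
    return sum(values[-window:]) / window


next_value = moving_average_forecast(SIGNAL)
```

A moving average lags behind a rising trend, as the result shows here, which is one reason dedicated time series methods model trend and seasonality explicitly.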

Search to Insight is a natural language query interface used to query data.

Major use cases for predictive planning are expense & costs, revenue & sales and workforce planning.

Predictive Planning answers questions about "what should happen" based on Smart Prediction ("what can we learn from historical data") and Time Series Forecasting ("what could happen").

The SAP Analytics Cloud Designer SDK enables implementation of

To check whether a query is unfolded, you can use the Explain Plan functionality. If all tables that are used in your query appear in your plan, unfolding has taken place successfully.
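
This check can be automated once the plan rows have been fetched. The sketch below assumes the rows are available as dictionaries with a `TABLE_NAME` key; that key, the sample plans and the function `check_unfolding` are assumptions for illustration and should be adapted to the actual Explain Plan output.

```python
def check_unfolding(plan_rows, expected_tables):
    """Return (unfolded, missing): unfolded is True if every base table appears in the plan."""
    seen = {row["TABLE_NAME"] for row in plan_rows if row.get("TABLE_NAME")}
    missing = sorted(set(expected_tables) - seen)
    return not missing, missing


# Hypothetical plan in which the base tables are visible.
unfolded_plan = [
    {"OPERATOR_NAME": "JOIN", "TABLE_NAME": None},
    {"OPERATOR_NAME": "COLUMN TABLE", "TABLE_NAME": "SALES"},
    {"OPERATOR_NAME": "COLUMN TABLE", "TABLE_NAME": "CUSTOMERS"},
]

# Hypothetical plan that only shows the calculation view itself.
blocked_plan = [
    {"OPERATOR_NAME": "COLUMN VIEW", "TABLE_NAME": "CV_SALES"},
]

ok_result = check_unfolding(unfolded_plan, ["SALES", "CUSTOMERS"])
blocked_result = check_unfolding(blocked_plan, ["SALES", "CUSTOMERS"])
```

The list of missing tables gives a starting point for finding the view feature that prevents unfolding.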

Some features of nested calculation views can block unfolding for the whole calculation view. The "Execute in SQL Engine" option allows you to override the default execution behavior in calculation views.

Performance: the plan is translated into an SQL representation, referred to as a "QO" (query optimization). Each box in the PlanViz output represents a plan operator (POP). The prefix identifies the execution engine, e.g. CePOP for the Calculation Engine and BwPOP for the OLAP Engine.