SAP S/4HANA Cloud enables the Intelligent Enterprise with SAP Signavio Business Transformation and emerging technologies on hybrid multi-cloud platforms like SAP Business Technology Platform (SAP BTP) or Microsoft Azure.

SAP S/4HANA Cloud Business AI and Generative AI solutions are deeply integrated into intelligent end-to-end business processes to enable data-driven decisions and process automation.

Business AI scenarios are built on the analysis and understanding of business processes in various areas like defect detection or predictive maintenance in Design-to-Operate processes.

Modern SAP Business AI and Generative AI solutions require cloud infrastructures with scalable compute and storage resources to develop, train, serve and operate machine learning models.

Leading Business AI cloud platforms like Microsoft Azure, Amazon AWS or SAP BTP offer tools to orchestrate containerized compute resources on Kubernetes clusters, to store large training datasets and to manage the lifecycle of training and inference models.

SAP Business AI machine learning capabilities are available as cloud services on the SAP Business Technology Platform (SAP BTP) or embedded into S/4HANA Cloud Core as native SAP HANA libraries. SAP Business AI cloud capabilities can be extended with AI/ML services on Hyperscaler platforms like Microsoft Azure, Amazon AWS and Google Cloud (GCP).

Some SAP BTP Business AI Services are ready to be used in end-to-end business process automation, business document processing, business recommendation or central invoice management scenarios.

Generative AI applies Deep Learning models to input prompts to generate original content like text, images or code. Multi-modal Generative AI models accept multiple input types like text, speech or images.

Large language models (LLMs) are used by Generative AI to perform modeling tasks like classification (e.g. sentiment analysis), summarization, comparison or generation.

The original transformer architecture implements encoder and decoder components. The input text is split into tokens, which the encoder transforms into embeddings, vector-based semantic and syntactic representations. The decoder uses these representations to generate new language sequences by predicting the most probable next tokens.

Interaction with Generative AI assistants like Microsoft Copilot or SAP Joule improves the digital user experience based on data-driven decisions supported by LLMs. Microsoft Copilot combines OpenAI GPT LLMs (the model family behind ChatGPT) with data provided by Microsoft Graph and offers the option to build custom copilots for various business-specific tasks.

Responsible generative AI solutions include steps to identify potential harms, measure harms in the output, mitigate harms at multiple layers (e.g. with content filters in a safety layer) and define plans to operate Business AI solutions responsibly.

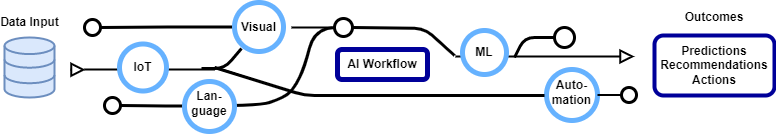

SAP Business AI enables data-driven decisions and enterprise automation based on machine learning capabilities like predictions or recommendations. Intelligent Data-to-Value workflows can extend S/4HANA Cloud with SAP BTP data management, analytics and Business AI services.

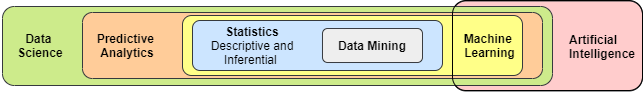

Artificial Intelligence simulates human intelligence in SAP Business AI processes with knowledge from data learned by Machine Learning (ML) models.

Machine Learning is about training models on input data to learn functions which infer target values as predictions. Machine learning combines data science and software development to enable Business and Generative AI capabilities embedded into intelligent S/4HANA Cloud processes.

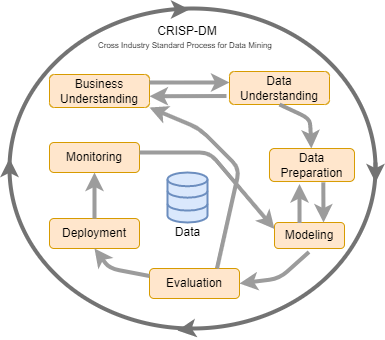

Standards like CRISP-DM (CRoss Industry Standard Process for Data Mining) and frameworks like Metaflow help data scientists to manage machine learning processes and are the basis for automating MLOps processes in productive environments.

Multidisciplinary data mining or machine learning projects can be organized into phases of business and data understanding, data modeling and deployment of inference models. Monitoring phases for deployed machine learning models detect the degradation of predictive model performance over time caused by changes in the data, the data distribution or the business environment.
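One common way to detect a change in data distribution during monitoring is the Population Stability Index (PSI), which compares the binned distribution of a feature at training time with its distribution in production. The sketch below is a minimal stdlib version under simplifying assumptions (equal-width bins over the combined range, a small epsilon for empty bins); the function name and bin count are illustrative choices, not part of any SAP or Azure monitoring API.

```python
import math

def population_stability_index(expected, actual, bins=10):
    """Compare two numeric samples; a higher PSI means a stronger distribution shift."""
    lo = min(min(expected), min(actual))
    hi = max(max(expected), max(actual))
    width = (hi - lo) / bins
    if width == 0:
        width = 1.0  # all values identical: everything falls into the first bin

    def shares(values):
        counts = [0] * bins
        for v in values:
            idx = min(int((v - lo) / width), bins - 1)  # clamp the maximum into the last bin
            counts[idx] += 1
        # small epsilon keeps log() defined for empty bins
        return [max(c / len(values), 1e-6) for c in counts]

    e, a = shares(expected), shares(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))

baseline = [x / 10 for x in range(100)]      # hypothetical training-time feature values
shifted = [x / 10 + 5 for x in range(100)]   # hypothetical production values after a shift
print(population_stability_index(baseline, baseline))  # 0.0, no shift
print(population_stability_index(baseline, shifted))   # large value, strong shift
```

A frequently quoted rule of thumb treats PSI values below about 0.1 as stable and above about 0.25 as a significant shift that may justify retraining; such thresholds are heuristics and should be calibrated per use case.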

Multi-cloud platform services like SAP AI Core on SAP BTP, AWS SageMaker Studio, Azure Machine Learning or Google Cloud AI help to manage the lifecycle phases of machine learning projects efficiently. These Machine Learning PaaS environments handle the underlying scalable compute and storage resources required to train or serve resource-intensive machine learning models and offer development platforms with tools like Jupyter Notebook integrated with popular ML frameworks (e.g. TensorFlow, Scikit-learn).

Data scientists can implement data-intensive machine learning workflows for productive scenarios with tools and frameworks like Metaflow, Argo Workflows and TensorFlow.

Argo Workflows orchestrates MLOps with custom resources on Kubernetes and supports directed acyclic graphs (DAG) for parallel processing. Containerized applications can be bundled with their resources as Docker images managed with container registries like Azure ACR or Docker Hub.
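The parallelism a DAG enables can be illustrated without Argo itself: every step whose dependencies are finished can run at the same time. The sketch below uses Python's standard `graphlib.TopologicalSorter` (Python 3.9+) on a hypothetical ML pipeline; Argo defines such DAGs declaratively in its workflow templates, so this is only an illustration of the scheduling idea, not Argo's API.

```python
from graphlib import TopologicalSorter

# Hypothetical ML pipeline: each step maps to the set of steps it depends on
pipeline = {
    "load_data": set(),
    "validate_data": {"load_data"},
    "feature_engineering": {"load_data"},
    "train_model_a": {"validate_data", "feature_engineering"},
    "train_model_b": {"validate_data", "feature_engineering"},
    "evaluate": {"train_model_a", "train_model_b"},
}

def parallel_stages(graph):
    """Group DAG nodes into stages whose members could run in parallel."""
    sorter = TopologicalSorter(graph)
    sorter.prepare()  # raises graphlib.CycleError if the graph is not acyclic
    stages = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())  # all dependencies of these steps are done
        stages.append(ready)
        sorter.done(*ready)                 # mark them finished to unlock successors
    return stages

for i, stage in enumerate(parallel_stages(pipeline)):
    print(i, stage)
```

Here the two training steps land in the same stage, so an orchestrator could schedule them as parallel containers.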

Kubernetes offers features like service discovery, load balancing within clusters, automated rollouts and rollbacks, self-healing of failing containers and configuration management.

Improved processing capabilities on cloud platforms are the prerequisite for compute-intensive machine learning, which requires high-performance Graphics Processing Units (GPUs) for parallel processing of machine learning algorithms. GPUs accelerate the processing of Large Language Models (LLMs), images or sound files represented as tensors (multidimensional arrays).

The amount of required compute resources depends on hyperparameter settings like batch size and number of epochs. The batch size defines the number of samples (single rows of data) which are processed before the model is updated. An epoch is one complete forward and backward pass through the entire training dataset. Training with smaller batch sizes requires less memory and updates the weights more frequently, but with less accurate gradient estimates than full-batch training.
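The relation between batch size, epochs and the number of weight updates is simple arithmetic. The helper below is a small sketch assuming one weight update per batch and that the last, smaller batch of each epoch is kept (some frameworks can drop it instead).

```python
import math

def training_updates(num_samples, batch_size, epochs):
    """Return (updates per epoch, total weight updates) for batch-wise training."""
    # the last batch of an epoch may be smaller than batch_size, so round up
    per_epoch = math.ceil(num_samples / batch_size)
    return per_epoch, per_epoch * epochs

print(training_updates(10_000, 32, 5))      # (313, 1565): mini-batch training
print(training_updates(10_000, 10_000, 5))  # (1, 5): full-batch training
```

Smaller batches therefore mean many more (cheaper, noisier) updates for the same number of epochs.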

Gradient descent is an iterative optimization algorithm which minimizes the cost function of model predictions by repeatedly adjusting the weights in the direction of the negative gradient, improving model accuracy. Gradient descent variants are batch gradient descent (all samples of the training set per update), mini-batch gradient descent (a subset of samples per update) and stochastic gradient descent (one sample per update).
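All three variants can be expressed with one loop whose only difference is the batch size. The sketch below fits a hypothetical one-feature linear model `y = w*x + b` by minimizing mean squared error; the learning rate, epoch count and data are illustrative assumptions chosen so that all variants converge on this small noiseless example.

```python
import random

def gradient_descent(xs, ys, batch_size, lr=0.1, epochs=1000, seed=0):
    """Fit y = w*x + b by minimizing mean squared error.

    batch_size == len(xs)     -> batch gradient descent
    1 < batch_size < len(xs)  -> mini-batch gradient descent
    batch_size == 1           -> stochastic gradient descent
    """
    rng = random.Random(seed)
    w, b = 0.0, 0.0
    indices = list(range(len(xs)))
    for _ in range(epochs):
        rng.shuffle(indices)  # new sample order in every epoch
        for start in range(0, len(indices), batch_size):
            batch = indices[start:start + batch_size]
            # gradient of the MSE cost over the current batch
            errors = [(w * xs[i] + b) - ys[i] for i in batch]
            grad_w = 2 * sum(e * xs[i] for e, i in zip(errors, batch)) / len(batch)
            grad_b = 2 * sum(errors) / len(batch)
            # step against the gradient to reduce the cost
            w -= lr * grad_w
            b -= lr * grad_b
    return w, b

xs = [i / 10 for i in range(20)]
ys = [3 * x + 1 for x in xs]
for size in (len(xs), 5, 1):  # batch, mini-batch, stochastic
    w, b = gradient_descent(xs, ys, batch_size=size)
    print(size, round(w, 3), round(b, 3))  # each run should approach w=3, b=1
```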

Microsoft Azure offers a portfolio of AI capabilities, such as Azure Machine Learning and Azure AI services, which applications can access with REST APIs to implement Business AI and Generative AI scenarios. An Azure AI services resource is a general resource that bundles several AI services and provides access to them with a single key and endpoint.

The Microsoft Azure Machine Learning cloud service supports the complete machine learning lifecycle, from data preparation and training to model evaluation and deployment, with integrated machine learning frameworks such as MLflow. Its Automated Machine Learning (AutoML) capability identifies the best algorithms and parameters for specific use cases automatically.

Azure Machine Learning compute instances serve as development environments and as compute targets for training or inferencing. Azure ML instance types are implemented as a Kubernetes custom resource definition (CRD) that specifies node selection and resource settings.

Microsoft Azure Machine Learning provides prebuilt and customizable machine learning models to implement Business AI or Generative AI solutions and to train custom models. The Microsoft Florence foundation model is a pre-trained general model on which you can build multiple adaptive models for specialized tasks like image classification, object detection, captioning or tagging.

Natural language processing (NLP) with Azure AI Language supports sentiment analysis, key phrase identification, text summarization and conversational language understanding. Azure AI Document Intelligence extends OCR with capabilities to identify field data in documents and to save this extracted data into databases.
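Sentiment analysis with Azure AI Language is called through a REST endpoint of the resource. The sketch below only builds the HTTP request with the standard library and does not send it; the path `/language/:analyze-text`, the `api-version=2023-04-01` value, the `Ocp-Apim-Subscription-Key` header and the JSON body shape follow the public Azure AI Language REST reference as understood here, and should be checked against the current API reference before use. Endpoint and key values are placeholders.

```python
import json
import urllib.request

def build_sentiment_request(endpoint, key, texts, language="en"):
    """Build (but do not send) a sentiment analysis request for Azure AI Language."""
    # assumed REST path and API version; verify against the current Azure docs
    url = endpoint.rstrip("/") + "/language/:analyze-text?api-version=2023-04-01"
    body = {
        "kind": "SentimentAnalysis",
        "analysisInput": {
            "documents": [
                {"id": str(i), "language": language, "text": t}
                for i, t in enumerate(texts, start=1)
            ]
        },
    }
    return urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Ocp-Apim-Subscription-Key": key,  # one key for the multi-service resource
            "Content-Type": "application/json",
        },
        method="POST",
    )

req = build_sentiment_request(
    "https://<your-resource>.cognitiveservices.azure.com",  # placeholder endpoint
    "<your-key>",                                           # placeholder key
    ["Great delivery speed.", "The invoice was wrong."],
)
```

With a real endpoint and key, `urllib.request.urlopen(req)` would send the request and return a JSON response with a sentiment label and confidence scores per document.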

SAP Business AI Machine Learning (ML) solutions offer enterprise ready technologies with lifecycle management to deliver trusted outcomes like recommendations or predictions.

SAP AI Core is the Business AI engine on the Business Technology Platform (SAP BTP) with lifecycle management, multi-cloud storage, scalable compute capabilities and multi-tenancy enabled by resource groups.

The AI Core SDKs integrate open-source frameworks for AI/ML workflow implementation like Metaflow and Argo Workflows and enable custom implementations of MLOps processes integrated with Git repositories.

SAP AI Core machine learning models are containerized as Docker images and managed in Docker registries. Kubernetes orchestrates these containers on training and serving clusters. Multi-cloud object stores like AWS S3 or Azure Storage can be integrated to store artifacts like referenced datasets or machine learning models.

Argo Workflows implements Custom Resource Definitions (CRD) to orchestrate workflows on Kubernetes clusters. The workflow template definitions are stored in Git repositories and integrated into CI/CD pipelines. Serving templates based on KServe define the deployment of the Docker images on inference servers.

Machine learning frameworks like TensorFlow 2 and Detectron2 offer algorithms to detect objects in images with localization and classification capabilities. SAP AI Core supports TensorFlow neural networks with high-level APIs like Keras for an optimized user experience and enhanced scaling options.

Furthermore, the SAP AI Core SDK Computer Vision package extends Detectron2 to integrate image processing machine learning (ML) scenarios. Object detection quality can be measured, e.g. with Intersection over Union (IoU), which compares the overlap of predicted and ground-truth bounding boxes to evaluate inference accuracy.
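IoU divides the area where a predicted box and a ground-truth box overlap by the area covered by both boxes together. A minimal sketch for axis-aligned boxes given as `(x_min, y_min, x_max, y_max)`; this is the generic formula, not a function of the SAP AI Core SDK.

```python
def iou(box_a, box_b):
    """Intersection over Union of two axis-aligned boxes (x_min, y_min, x_max, y_max)."""
    # corners of the overlapping rectangle
    ix_min = max(box_a[0], box_b[0])
    iy_min = max(box_a[1], box_b[1])
    ix_max = min(box_a[2], box_b[2])
    iy_max = min(box_a[3], box_b[3])
    # no overlap -> zero-sized intersection
    inter = max(0.0, ix_max - ix_min) * max(0.0, iy_max - iy_min)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0

print(iou((0, 0, 2, 2), (1, 1, 3, 3)))  # 1 / 7, about 0.143
```

A detection is commonly counted as correct when its IoU with the ground truth reaches a threshold such as 0.5; the exact threshold depends on the evaluation protocol.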

SAP S/4HANA Intelligent Scenario Lifecycle Management (ISLM) offers Fiori apps to manage pre-delivered and custom intelligent scenarios, either embedded, based on SAP HANA Machine Learning (ML) libraries (PAL, APL), or side-by-side, deployed on the SAP Business Technology Platform (BTP).

Embedded ISLM scenarios are integrated in the ABAP layer with low computing resource requirements and access to SAP HANA Machine Learning Libraries. Typical examples are trend forecasting, classifications or categorizations on structured S/4HANA data.

SAP HANA offers three Application Function Libraries (AFL) for data-intensive and complex operations.

The Predictive Analysis Library (PAL) provides built-in functions and specialized algorithms which require knowledge of statistical methods and data mining techniques. The Automated Predictive Library (APL) automates most of the steps in applying machine learning algorithms. External machine learning frameworks for building models, like Google TensorFlow, can be integrated on-premise with the External Machine Learning Library (EML).

Supervised machine learning trains models on labeled data, i.e. input feature vectors (instances) with known outputs, to learn a decision function that maps inputs to targets. Classification, e.g. sentiment analysis, predicts discrete target classes (categories). Binary and multi-class classification algorithms assign exactly one label per instance, in contrast to multi-label classification methods, which are trained to assign one or more labels to input values.
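The difference between single-label and multi-label classification shows up in the final decision rule applied to a model's per-label scores. The sketch assumes hypothetical scores for one instance: a single-label classifier keeps only the highest-scoring label, while a multi-label classifier keeps every label above a threshold (0.5 here, an illustrative choice).

```python
def single_label(scores):
    """Binary / multi-class: pick exactly one label, the one with the highest score."""
    return max(scores, key=scores.get)

def multi_label(scores, threshold=0.5):
    """Multi-label: keep every label whose score reaches the threshold."""
    return sorted(label for label, s in scores.items() if s >= threshold)

# Hypothetical per-label scores for one support ticket
scores = {"billing": 0.81, "complaint": 0.64, "shipping": 0.12}
print(single_label(scores))  # billing
print(multi_label(scores))   # ['billing', 'complaint']
```

In practice, multi-class models usually produce scores that sum to 1 (e.g. via softmax), whereas multi-label models produce an independent score per label (e.g. via sigmoid), which is why the example scores do not sum to 1.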

Simple and multiple linear regression algorithms predict continuous targets, like time series values or other numerical quantities, from one or from two or more explanatory (independent) variables called features, respectively.
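For simple linear regression, ordinary least squares has a closed-form solution: the slope is the covariance of feature and target divided by the variance of the feature. A minimal sketch with hypothetical monthly demand figures; multiple linear regression generalizes this to a matrix form, $\hat\beta = (X^\top X)^{-1} X^\top y$.

```python
def fit_simple_linear_regression(xs, ys):
    """Ordinary least squares for y = intercept + slope * x."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # slope = covariance(x, y) / variance(x); the 1/n factors cancel
    cov_xy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    slope = cov_xy / var_x
    intercept = mean_y - slope * mean_x
    return intercept, slope

# hypothetical monthly demand trend
months = [1, 2, 3, 4]
demand = [3, 5, 7, 9]
intercept, slope = fit_simple_linear_regression(months, demand)
print(intercept, slope)       # 1.0 2.0
print(intercept + slope * 5)  # trend forecast for month 5: 11.0
```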

Unsupervised training algorithms try to find unknown patterns in unlabeled data, e.g. clustering, which groups similar objects together. Because of its low memory requirements, K-Means clustering can be used in Big Data scenarios with numerical data.
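K-Means alternates two steps: assign every point to its nearest centroid, then move each centroid to the mean of its assigned points, until the centroids stop changing (Lloyd's algorithm). The sketch below works on 2-D points and, as a simplification, uses the first `k` points as initial centroids; production implementations typically use smarter initialization such as k-means++.

```python
import math

def k_means(points, k, iterations=100):
    """Lloyd's algorithm; initial centroids are the first k points (a simplification)."""
    centroids = [tuple(p) for p in points[:k]]
    for _ in range(iterations):
        # assignment step: each point joins its nearest centroid
        clusters = [[] for _ in range(k)]
        for p in points:
            nearest = min(range(k), key=lambda c: math.dist(p, centroids[c]))
            clusters[nearest].append(p)
        # update step: move each centroid to the mean of its cluster
        new_centroids = [
            tuple(sum(coord) / len(cluster) for coord in zip(*cluster)) if cluster else centroids[c]
            for c, cluster in enumerate(clusters)
        ]
        if new_centroids == centroids:  # converged: assignments no longer change
            break
        centroids = new_centroids
    return centroids, clusters

points = [(1, 1), (8, 8), (1.5, 2), (9, 8.5), (1, 1.5), (8.5, 9)]  # hypothetical data
centroids, clusters = k_means(points, k=2)
print(centroids)  # one centroid near (1.17, 1.5), one at (8.5, 8.5)
```

Memory use stays low because only the k centroids and the current assignments must be kept, which is what makes the method attractive for large datasets.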

Reinforcement learning, often treated as a third paradigm beside supervised and unsupervised learning, trains agents with rewards from their environment as positive feedback for correct actions.
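A multi-armed bandit is the simplest reinforcement learning setting: an agent repeatedly chooses an action, receives a reward and updates its estimate of each action's value. The sketch below uses an epsilon-greedy strategy (mostly exploit the best-known action, sometimes explore a random one); the reward means, noise level and epsilon are hypothetical parameters for illustration.

```python
import random

def epsilon_greedy_bandit(rewards, steps=500, epsilon=0.1, noise_sd=0.1, seed=42):
    """Agent learns which action pays best from reward feedback alone.

    rewards: hypothetical mean reward per action; the environment adds Gaussian noise.
    """
    rng = random.Random(seed)
    n = len(rewards)
    estimates = [0.0] * n  # learned value of each action
    counts = [0] * n
    for step in range(steps):
        if step < n:
            action = step                                        # try every action once
        elif rng.random() < epsilon:
            action = rng.randrange(n)                            # explore
        else:
            action = max(range(n), key=lambda a: estimates[a])  # exploit
        noise = rng.gauss(0.0, noise_sd) if noise_sd > 0 else 0.0
        reward = rewards[action] + noise  # feedback from the environment
        counts[action] += 1
        # incremental mean update of the action-value estimate
        estimates[action] += (reward - estimates[action]) / counts[action]
    return estimates, counts

estimates, counts = epsilon_greedy_bandit([0.2, 0.5, 0.9])
print(counts)  # most steps should go to the last action, which has the highest mean reward
```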

Deep Learning is a subset of machine learning which loosely models human brain processing with artificial neural networks to solve a variety of supervised and unsupervised machine learning tasks. Deep Learning automates feature engineering and captures complex, non-linear correlations, typically accelerated by GPUs.

Artificial Neural Networks (ANN) are composed of three types of layers (input, hidden and output) that process tabular data with activation functions and learned weights. Recurrent Neural Networks (RNN) process data step-by-step and are able to make use of sequential information. Convolutional Neural Networks (CNN) are able to extract spatial features in image processing tasks like recognition or classification.
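The layer structure of a small feed-forward network can be shown as a forward pass: each layer computes weighted sums plus biases and applies an activation function. The weights below are hypothetical, hand-picked values; in practice they are learned during training (e.g. with backpropagation and gradient descent), which this sketch does not show.

```python
import math

def relu(v):
    """ReLU activation: negative values become 0."""
    return [max(0.0, x) for x in v]

def sigmoid(x):
    """Squash a value into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))

def dense(inputs, weights, biases):
    """One fully connected layer: weighted sum of inputs plus bias per neuron."""
    return [sum(w * x for w, x in zip(row, inputs)) + b for row, b in zip(weights, biases)]

def forward(features):
    # input layer: 3 features -> hidden layer: 2 ReLU neurons (hypothetical weights)
    hidden = relu(dense(features, [[0.5, -0.2, 0.1], [0.3, 0.8, -0.5]], [0.0, 0.1]))
    # hidden layer -> output layer: 1 neuron
    output = dense(hidden, [[1.0, -1.0]], [0.2])
    return sigmoid(output[0])  # probability-like score in (0, 1)

print(forward([1.0, 2.0, 3.0]))  # about 0.525
```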

Optimization of machine learning training comprises data preparation, feature engineering, error reduction, performance evaluation of model algorithms and continuous improvement.

Data distributed across multi-cloud environments has to be loaded via ETL or ELT pipelines. Splitting the loaded input data makes it possible to use one subset for training and the other subset to validate the model performance.
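A reproducible training/validation split shuffles the rows with a fixed seed and cuts them at a chosen share. A minimal sketch; the 80/20 ratio and the seed are illustrative defaults, and many projects additionally hold out a separate test set.

```python
import random

def train_validation_split(rows, validation_share=0.2, seed=7):
    """Shuffle rows reproducibly and split them into training and validation subsets."""
    shuffled = list(rows)                  # copy, leave the input untouched
    random.Random(seed).shuffle(shuffled)  # fixed seed -> repeatable split
    n_validation = round(len(shuffled) * validation_share)
    cut = len(shuffled) - n_validation
    return shuffled[:cut], shuffled[cut:]

rows = list(range(100))  # hypothetical loaded records
train, validation = train_validation_split(rows)
print(len(train), len(validation))  # 80 20
```

Shuffling before cutting avoids a biased split when the loaded data is ordered, e.g. by date or customer.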

Hyperparameter tuning adjusts the hyperparameters which control the learning process, e.g. batch size or number of epochs, until the model outcome meets the business goals.
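Grid search is one simple tuning strategy: train a model for every combination of candidate hyperparameters and keep the combination with the lowest validation error. The sketch tunes learning rate and epochs for a hypothetical one-feature linear model trained with batch gradient descent; the grid values and data are illustrative assumptions, and random search or Bayesian optimization are common alternatives.

```python
import itertools

def train_linear_model(xs, ys, learning_rate, epochs):
    """Batch gradient descent for y = w*x + b (mean squared error)."""
    w = b = 0.0
    n = len(xs)
    for _ in range(epochs):
        errors = [w * x + b - y for x, y in zip(xs, ys)]
        w -= learning_rate * 2 * sum(e * x for e, x in zip(errors, xs)) / n
        b -= learning_rate * 2 * sum(errors) / n
    return w, b

def mse(model, xs, ys):
    """Mean squared error of a (w, b) model on a dataset."""
    w, b = model
    return sum((w * x + b - y) ** 2 for x, y in zip(xs, ys)) / len(xs)

def grid_search(train, validation, grid):
    """Try every hyperparameter combination; keep the one with the lowest validation error."""
    results = {}
    for lr, epochs in itertools.product(grid["learning_rate"], grid["epochs"]):
        model = train_linear_model(*train, learning_rate=lr, epochs=epochs)
        results[(lr, epochs)] = mse(model, *validation)
    best = min(results, key=results.get)
    return best, results

train = ([0, 1, 2, 3, 4], [1, 3, 5, 7, 9])  # hypothetical data, y = 2x + 1
validation = ([5, 6], [11, 13])
grid = {"learning_rate": [0.001, 0.01, 0.05], "epochs": [10, 100, 1000]}
best, results = grid_search(train, validation, grid)
print(best)  # (0.05, 1000) on this data
```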

Feature selection tries to reduce the number of independent input (explanatory) variables without losing relevant information. Selection methods include Filters, which rank features by scores, Wrappers, which evaluate feature subsets with a model, and Embedded methods, which select features as part of model training. Feature scaling normalizes the value ranges of the features in a dataset.
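A filter method scores each feature independently of any model, for example by the absolute Pearson correlation with the target, and ranks features by that score; min-max scaling then maps a feature onto the range [0, 1]. The feature names and values below are hypothetical.

```python
def pearson(a, b):
    """Pearson correlation coefficient of two equally long numeric lists."""
    n = len(a)
    mean_a, mean_b = sum(a) / n, sum(b) / n
    cov = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    norm_a = sum((x - mean_a) ** 2 for x in a) ** 0.5
    norm_b = sum((y - mean_b) ** 2 for y in b) ** 0.5
    return cov / (norm_a * norm_b)

def filter_rank(features, target):
    """Filter method: rank features by |correlation| with the target, highest first."""
    scores = {name: abs(pearson(values, target)) for name, values in features.items()}
    return sorted(scores, key=scores.get, reverse=True)

def min_max_scale(values):
    """Feature scaling: map values linearly onto [0, 1]."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0 for _ in values]  # constant feature: no range to scale
    return [(v - lo) / (hi - lo) for v in values]

target = [10, 20, 30, 40]  # hypothetical revenue
features = {
    "order_volume": [1, 2, 3, 4],
    "discount_pct": [5, 3, 6, 2],
    "store_count": [7, 7, 8, 8],
}
print(filter_rank(features, target))  # ['order_volume', 'store_count', 'discount_pct']
print(min_max_scale(target))          # [0.0, 0.333..., 0.666..., 1.0]
```

A filter is cheap because it never trains a model, but it scores features one at a time and can miss interactions that wrapper or embedded methods would detect.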

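Typical machine learning model errors are Bias, Variance, Overfitting and Underfitting. Bias is a systematic deviation of predictions from the true values, typically caused by a model that is too simple to capture the underlying pattern (Underfitting). Variance, calculated as the average of squared differences from the mean, measures how strongly predictions change with different training data; a model with high variance has learned noise in the training data (Overfitting) and is unable to generalize. Reducing these errors means balancing model complexity between both extremes.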
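For squared-error loss, the standard bias-variance decomposition makes the trade-off explicit. Assuming the data follows $y = f(x) + \varepsilon$ with noise variance $\sigma^2$, and taking expectations over training sets and noise, the expected error of a learned model $\hat f$ at a point $x$ splits into three terms:

$$
\mathbb{E}\big[(y - \hat{f}(x))^2\big]
= \underbrace{\big(\mathbb{E}[\hat{f}(x)] - f(x)\big)^2}_{\text{Bias}^2}
+ \underbrace{\mathbb{E}\big[(\hat{f}(x) - \mathbb{E}[\hat{f}(x)])^2\big]}_{\text{Variance}}
+ \underbrace{\sigma^2}_{\text{irreducible noise}}
$$

The variance term is exactly the average squared difference from the mean, here the mean prediction $\mathbb{E}[\hat f(x)]$. Simpler models tend to raise the bias term (Underfitting) and more flexible models the variance term (Overfitting), while $\sigma^2$ cannot be reduced by any model.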

Model performance evaluation measures the quality of machine learning models with graphs, indicators or statistics.

Lift charts, profit matrix and ROC curves are examples of visual evaluation methods.

A confusion matrix evaluates binary classification models with the numbers of correct and incorrect predictions (true and false positives and negatives). Derived metrics include the proportion of correct predictions (Accuracy), the ratio of true positives to all actual positive cases (Recall) and the ratio of true positives to all positive predictions (Precision). Other evaluation metrics are the Predictive Power (KI) and Prediction Confidence (KR) indicators or the F1 Score.
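The confusion-matrix metrics follow directly from the four counts. A minimal sketch for binary labels; the example labels are hypothetical, and KI and KR are indicators specific to SAP's automated predictive tooling, so they are not computed here.

```python
def classification_metrics(actual, predicted, positive=1):
    """Confusion matrix counts and derived metrics for a binary classifier."""
    pairs = list(zip(actual, predicted))
    tp = sum(1 for a, p in pairs if a == positive and p == positive)
    tn = sum(1 for a, p in pairs if a != positive and p != positive)
    fp = sum(1 for a, p in pairs if a != positive and p == positive)
    fn = sum(1 for a, p in pairs if a == positive and p != positive)
    accuracy = (tp + tn) / len(pairs)               # share of correct predictions
    precision = tp / (tp + fp) if tp + fp else 0.0  # correct share of positive predictions
    recall = tp / (tp + fn) if tp + fn else 0.0     # found share of actual positives
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"tp": tp, "tn": tn, "fp": fp, "fn": fn,
            "accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}

actual    = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]
predicted = [1, 1, 1, 0, 1, 0, 0, 0, 0, 0]
print(classification_metrics(actual, predicted))
# tp=3, tn=5, fp=1, fn=1 -> accuracy 0.8, precision 0.75, recall 0.75, f1 0.75
```

The F1 Score is the harmonic mean of Precision and Recall, so it is only high when both are high.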

Continuous improvement of deployed machine learning models can be realized manually or automatically if the models are able to learn from new data and recalibrate autonomously.